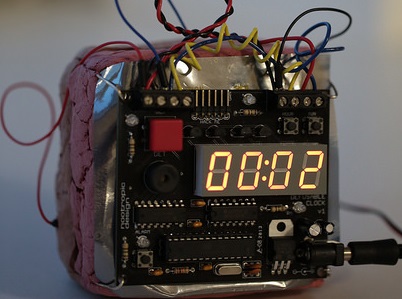

Every organization has at least one: the black box in the corner that few understand and even fewer want to touch. It hums along for years, powering the critical parts of the business. It contains the crown jewels of your enterprise. When it goes down, so does your business. But is it a time bomb waiting to go off?

If you talk to any vendor or consultant, the answer will be yes, followed by a pitch for their cloud migration strategy, regardless of whether it is a good fit. The project starts, some components get ported over, and several years later you are left with an even bigger mess than when you started: technical debt that makes everything more brittle, plus the cost of maintaining two systems instead of one.

Over the past several years, API-driven architecture has taken off, with microservices leading the charge as the primary building blocks. It makes sense: technologies like AWS Fargate and Azure’s App Service make it easy to bring up microservices and, combined with their respective API gateways, scale them when needed. Kubernetes provides a powerful container orchestration platform and is available not just on-prem but on all major cloud providers. With the correct underlying architecture, this lets your organization move quickly and scale out at a time when gains in raw hardware speed have leveled off. With this benefit comes much greater complexity, from setting up the infrastructure to building, deploying, running, and debugging services. Additionally, one of the tenets of the microservice architecture is localizing data to the service. This poses its own problems, from data duplication to interdependencies between microservices, where a fault in one service can spread and bring the rest of the infrastructure down.

What does this have to do with the legacy system in the bowels of your datacenter? Depending on the application and hardware, it may not run on the architectures common among current cloud providers, namely Intel’s x86 or ARM. Additionally, the underlying data may be too large to migrate into the cloud. Lastly, it may communicate over a protocol that is not HTTP-based. Building an API interface in front of your legacy system, whether it talks natively to the application or to the underlying data, is one approach that can extend the usable life of your legacy application.
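To make the idea concrete, here is a minimal sketch of such an API layer in Python. Everything in it is an assumption for illustration: the pipe-delimited `GET_ACCOUNT|<id>` command format, the `fake_legacy_send` stand-in for the real transport, and the `/accounts/<id>` route are hypothetical and do not describe any particular product. The point is only that the translation between HTTP and the legacy protocol lives in one small, testable function.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Callable, Tuple

# Hypothetical legacy wire format (an assumption, not a real product's protocol):
#   request:  "GET_ACCOUNT|<id>"
#   response: "OK|<name>|<status>"  or  "ERR|<reason>"
LegacySend = Callable[[str], str]


def fake_legacy_send(command: str) -> str:
    """Stand-in for the real transport (a raw socket, vendor SDK, etc.)."""
    accounts = {"42": ("Ada Lovelace", "ACTIVE")}
    verb, _, account_id = command.partition("|")
    if verb != "GET_ACCOUNT" or account_id not in accounts:
        return "ERR|NOT_FOUND"
    name, status = accounts[account_id]
    return f"OK|{name}|{status}"


def get_account(path: str, send: LegacySend) -> Tuple[int, dict]:
    """Translate an HTTP path into a legacy command and the reply into JSON-ready data."""
    parts = path.strip("/").split("/")
    if len(parts) != 2 or parts[0] != "accounts" or not parts[1]:
        return 404, {"error": "unknown route"}
    reply = send(f"GET_ACCOUNT|{parts[1]}").split("|")
    if reply[0] != "OK" or len(reply) != 3:
        return 404, {"error": "account not found"}
    return 200, {"id": parts[1], "name": reply[1], "status": reply[2]}


class FacadeHandler(BaseHTTPRequestHandler):
    """Thin HTTP shell around get_account; all protocol logic stays in that function."""

    def do_GET(self) -> None:
        status, body = get_account(self.path, fake_legacy_send)
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8080) -> None:
    """Start the facade on localhost; blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), FacadeHandler).serve_forever()
```

Calling `serve()` and requesting `/accounts/42` would return the account as JSON. In a real deployment, `fake_legacy_send` would be replaced by whatever actually speaks to the legacy system, and the callers of the API would not need to change.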

One recent example from our work is a custom legacy application handling account management and provisioning between Oracle DB and a legacy identity and access management (IAM) solution. The client’s application leveraged both products for user management, but the client ran into issues with the IAM product reaching the end of its service life and the underlying hardware failing. We examined the dependencies and analyzed the communication between their related internal applications and the two vendor products. We then created an interface where changes would be published; depending on where a change originated, a resulting action would be triggered across their Oracle DB, the legacy IAM solution, and their newer IAM solution. Except for the time needed to cut over to the new interface, they stayed operational, and they were able to decommission their old IAM solution. Changes that happened outside of their application were automatically picked up, and they finished their transition with little end-user impact while continuing to fully use their internal application. They saved hundreds of thousands of dollars per year in licensing and support fees, and consolidated their solution onto virtual machines, decreasing their hardware maintenance costs and time to restoration.
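The origin-based routing described above can be illustrated with a small Python sketch. This is not the code from that engagement; the `Change` and `ChangeRouter` names, the system labels, and the rule of forwarding a change to every system except the one it came from are assumptions chosen to show the general pattern.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Change:
    origin: str   # system where the change was first made
    user_id: str
    action: str   # e.g. "create", "disable"


Handler = Callable[[Change], None]


class ChangeRouter:
    """Publishes each change to every registered system except its origin."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, system: str, handler: Handler) -> None:
        self._handlers[system] = handler

    def publish(self, change: Change) -> List[str]:
        """Forward the change and return the systems that were notified."""
        if change.origin not in self._handlers:
            raise ValueError(f"unknown origin: {change.origin}")
        notified = []
        for system, handler in self._handlers.items():
            if system == change.origin:
                continue  # do not echo a change back to where it started
            handler(change)
            notified.append(system)
        return notified


# Usage: three hypothetical systems that simply record what they receive.
log: List[tuple] = []
router = ChangeRouter()
for name in ("oracle_db", "legacy_iam", "new_iam"):
    router.register(name, lambda c, n=name: log.append((n, c.user_id, c.action)))

targets = router.publish(Change(origin="legacy_iam", user_id="jdoe", action="disable"))
```

Skipping the origin is what keeps a change from bouncing back and forth between systems. Once the old system is retired, removing its registration is the only change the router needs.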

Ultimately, you don’t have to let your legacy system hold you back from your digital transformation efforts. Let’s discuss how to turn your legacy system from an obstacle into a strength.